Systems Thinking — Stocks, Flows, and Feedback Loops

Res Extensa #6 :: Thinking in Systems, complexity, emergence, and why systems approaches are valuable in business

We're coasting right through the holiday season. School has let out for a couple of weeks, so the kids are at home (back in chaos mode around here). Seeing the vaccines making their way into the world is a great Christmas present for all of us — anything that has us closing the book on 2020 on a note of positive progress is welcome!

The development of these vaccines is fascinating on several levels. Not only did multiple companies aggressively pursue a solution to our shared global crisis, but they also embraced new techniques that have implications for drug development writ large from here on out. The mRNA approach looks promising not only for antivirals, but also for other disorders and chronic diseases. The idea of genetically tailored, personalized cancer vaccines is just mind-blowing. Stack all that up with the recent breakthroughs coming out of DeepMind's AlphaFold project, and 2020 doesn't look so bad for technological development.

What we haven't done well in 2020 is manage the crisis. Our COVID response (worldwide, but acutely in the US) is a perfect demonstration of our innate inability to understand complex systems. As a rule, it takes us a long time and a lot of error to figure out how little we really know. The panoply of human biases is on display as we muddle our way through policy changes, data analyses, overreactions, underreactions, and everything in between, without ever managing to rein in the chaos.

Chaos and Complexity

It was James Gleick's aptly titled book Chaos that first got me interested in complexity, and in how it turns fields like meteorology, evolution, sociology, and financial-market prediction into wicked domains that we struggle to model and untangle. Fundamentally, what makes systems like the weather resist our attempts to model them is an intractable volume of data, piped through compounding feedback loops and involving far too many variables to predict accurately.

Several books I've read since then dovetail with and expand on this idea: complexity surrounds us, and failing to model that reality often leads us to misunderstand it. In economics you've got the work of Sowell and Hayek; in biology, Geoff West's Scale; McWhorter on linguistics; and even Jeff Hawkins's work on how the brain works. And of course, Nassim Taleb's entire bibliography. All of these involve collisions between complex domains and our inability to model them effectively.

We naturally view the world in simplified, localized, cause-and-effect style models. In our efforts to distill unmanageable quantities of variables, we have to ignore or "hold constant" things that are, in reality, never constant.

Systems Thinking

A better way to view the world is as a network of interconnected systems. Since I've found myself pulling on this thread so often over the last few years, I picked up Donella Meadows's book on the topic, Thinking in Systems. She gives a great overview of systems theory and its applications, with many examples of how to unwind complex systems and expose their relationship structures, even when quantifying those relationships remains difficult.

Systems theory views the world as an interlocking web of "stocks and flows." A stock is an accumulation of something (water in a bathtub, timber in a forest, cash in a bank account), and flows are the rates that fill or drain it. Dozens (or hundreds, or thousands) of individual feedback loops interleave with one another, producing either the nonlinear output of self-reinforcing cycles or the tempering effect of balancing loops. The book is full of diagrams breaking down the stocks and flows in various types of systems.

Even these schematics are reductionist models of what the real ecosystem looks like (one example shows the harvest of a renewable resource, like logging timber). Still, this approach helps you view the world in a multidimensional, nonlinear way, and gets you out of the mode of overly simplistic, single cause-and-effect structures.
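To make the stock-and-flow vocabulary concrete, here is a minimal Python sketch of the timber example under loudly stated assumptions: the forest regrows logistically (regrowth = r * S * (1 - S / K)), which bundles a reinforcing loop (more trees, more seedlings) with a balancing loop (crowding as the forest nears its carrying capacity K), and loggers remove a fixed harvest each year. The function name `simulate_forest` and every number are hypothetical, not taken from Meadows's book.

```python
def simulate_forest(stock, capacity, growth_rate, harvest, years):
    """Step a timber stock forward one year at a time.

    Assumed model (illustrative, not from the book):
      inflow  = logistic regrowth = growth_rate * stock * (1 - stock / capacity)
      outflow = a fixed annual harvest
    """
    history = [stock]
    for _ in range(years):
        # Reinforcing loop: regrowth scales with the trees already standing.
        # Balancing loop: (1 - stock / capacity) slows regrowth as the forest fills up.
        regrowth = growth_rate * stock * (1 - stock / capacity)
        # The stock cannot go negative; once it hits zero, nothing regrows.
        stock = max(0.0, stock + regrowth - harvest)
        history.append(stock)
    return history


CAPACITY, RATE = 1000.0, 0.2          # hypothetical carrying capacity and regrowth rate
MAX_REGROWTH = RATE * CAPACITY / 4    # peak regrowth (50.0), reached at half capacity

modest = simulate_forest(CAPACITY, CAPACITY, RATE, harvest=40.0, years=100)
greedy = simulate_forest(CAPACITY, CAPACITY, RATE, harvest=60.0, years=100)

print(round(modest[-1]))  # 724: settles just above the stable equilibrium (~723.6)
print(greedy[-1])         # 0.0: the forest collapses
```

The only difference between the two runs is 20 units of harvest a year, yet one forest settles and the other collapses, because the larger harvest exceeds the most the regrowth loop can ever supply. That kind of threshold is invisible if you treat regrowth as a constant flow.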

Defining and Differentiating Systems Thinking

While reading about it, I thought about how to pin down what sets the systems-thinking worldview apart. What is its opposite? One source uses "linear thinking." Another contrasts "reductionist" and "holistic" thinking. I prefer "linear thinking" because it implies a level of simplicity that complex systems always defy, and it feels particularly descriptive when you stack up the two sides' contrasting properties:

Systems thinking is defined by:

Multivariate influences — many-to-many relationships, nesting

Complexity — interlocking feedback loops; intractable outcomes with many layers of interaction

Nonlinearity — compounding; outputs scaling with "stock" volumes

Focus on behaviors — dynamic behaviors and tendencies drive outputs

Fuzzy boundaries — boundaries between components are blurry and shifting

Linear thinking, by contrast, is defined by:

Cause and effect — simple one-to-one feedback loops

Simplicity — computable, predictable outcomes

Linearity — fixed; unchanging constant flows

Focus on events — frozen-in-time individual events drive outputs

Clean boundaries — stark boundaries between elements
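The gap between "focus on events" and "focus on behaviors" shows up even in a one-stock model. Below is a minimal Python sketch (the function `adjust_toward` and all numbers are hypothetical) of a single balancing loop: each step, a decision-maker closes half the gap between a target and the stock they observe. The one added assumption is a reporting delay, so the observed value is the stock as it stood a few steps earlier. A cause-and-effect reading ("below target, so add more") predicts a smooth approach; the delay produces overshoot and oscillation instead.

```python
def adjust_toward(target, start, gain, delay, steps):
    """Balancing loop with a perception delay (illustrative model).

    Each step the stock changes by gain * (target - perceived), where
    `perceived` is the stock as it was `delay` steps ago.
    """
    # Pad the history so the stock appears to have sat at `start` before t = 0.
    history = [start] * (delay + 1)
    for _ in range(steps):
        perceived = history[-1 - delay]
        history.append(history[-1] + gain * (target - perceived))
    # Drop the padding; index 0 of the result is t = 0.
    return history[delay:]


prompt = adjust_toward(target=20.0, start=10.0, gain=0.5, delay=0, steps=10)
delayed = adjust_toward(target=20.0, start=10.0, gain=0.5, delay=2, steps=10)

print(prompt[:4])   # [10.0, 15.0, 17.5, 18.75]: approaches 20 and never passes it
print(delayed[:9])  # [10.0, 15.0, 20.0, 25.0, 27.5, 27.5, 25.0, 21.25, 17.5]
```

Nothing in the decision rule changed between the two runs; only the delay did. That is the sense in which behavior, rather than any single event, drives the output.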

This is not to imply that "linear thinking" comes from a lack of intelligence, or that it makes you incapable of appreciating these factors. Modeling the world at larger scales (economies, biomes, multinational organizations) isn't a natural act; it's very much a modern problem. No one in 800 AD was worried about understanding global climate patterns. Most problems were far simpler and local to one's surroundings.

I think there's a good analogy here from physics, at least for explaining why we keep applying linear solutions to complex problems: the comparison between Newtonian and relativistic mechanics. The first model works well at human scales: gravity is a force acting instantly at a distance, there is no cosmic speed limit, and space and time are independent. The second is the truer model of reality: gravity is the curvature of spacetime, nothing travels faster than light, and space and time are inseparable. But at the scales we perceive in day-to-day life, Newton's model works fine.

Systems theory is similar: at the scale of individual human behavior, direct cause and effect is a pretty good way to make decisions. If I'm an ancient Eurasian nomad and I'm hungry, I don't need to think in terms of entire food chains, sustainability, restocking, or overharvesting. I just find something nearby and eat it. Life goes on; my tribe survives another day.

For today's complex world, though, systems thinking is the appropriate method. It forces you to analyze the second- and third-order impacts of decisions, which simplistic modeling makes easy to paint over. I'm reminded of the classic qualifier in high school physics problems: "assume no friction, assume no gravity." It's great for teaching the relationship between force and motion, but the temptation to oversimplify and model cleanly creates massive problems when we apply it liberally in real life.

Application in Companies, Businesses, and the Workplace

In companies, we continually need models to predict things for us: markets, competitive landscapes, staffing requirements, what product to build, cost-effective customer acquisition. We operate in ever-fluid environments full of unknowns. And as with all man-made systems, we're trying to design the most effective, efficient way to deploy resources. There's a useful descriptor for this from the economist Lionel Robbins, who defined the central concern of economics as:

The allocation of scarce resources which have alternative uses

These two factors also map neatly onto the fundamental building blocks of systems: scarce resources (stocks) with alternative uses (flows).

The loops in a system allocate or return varying quantities of resources to different subsystems. "Alternative uses" are the trade-offs you make in how you manage those allocations.
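Robbins's framing maps onto a stock-and-flow model fairly directly. As a hypothetical illustration (the function `allocate` and all numbers are assumptions, not drawn from any real company), treat a budget as the stock: each period it is fully spent, with some share flowing into a use that returns resources the next period (a reinforcing loop) and the rest flowing into uses that return nothing, while a fixed base income refills the stock.

```python
def allocate(stock, reinvest_share, payback, base_income, periods):
    """Hypothetical budget model: spend the whole stock every period.

    - reinvest_share of it flows into a use that returns `payback` per unit next period
    - the remainder flows into uses that return nothing (their value is outside the model)
    - a fixed base_income refills the stock each period
    """
    history = [stock]
    for _ in range(periods):
        reinvested = reinvest_share * stock
        stock = base_income + payback * reinvested
        history.append(stock)
    return history


cautious = allocate(100.0, reinvest_share=0.2, payback=1.5, base_income=100.0, periods=200)
aggressive = allocate(100.0, reinvest_share=0.6, payback=1.5, base_income=100.0, periods=200)

# While payback * reinvest_share < 1, the stock settles at base_income / (1 - payback * reinvest_share).
print(round(cautious[-1], 1))    # 142.9
print(round(aggressive[-1], 1))  # 1000.0
```

In this toy model, reinvesting more always wins, because the uses that return nothing are valued at zero. Real alternative uses carry value the model can't see, and that hidden value is exactly where the trade-offs live.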

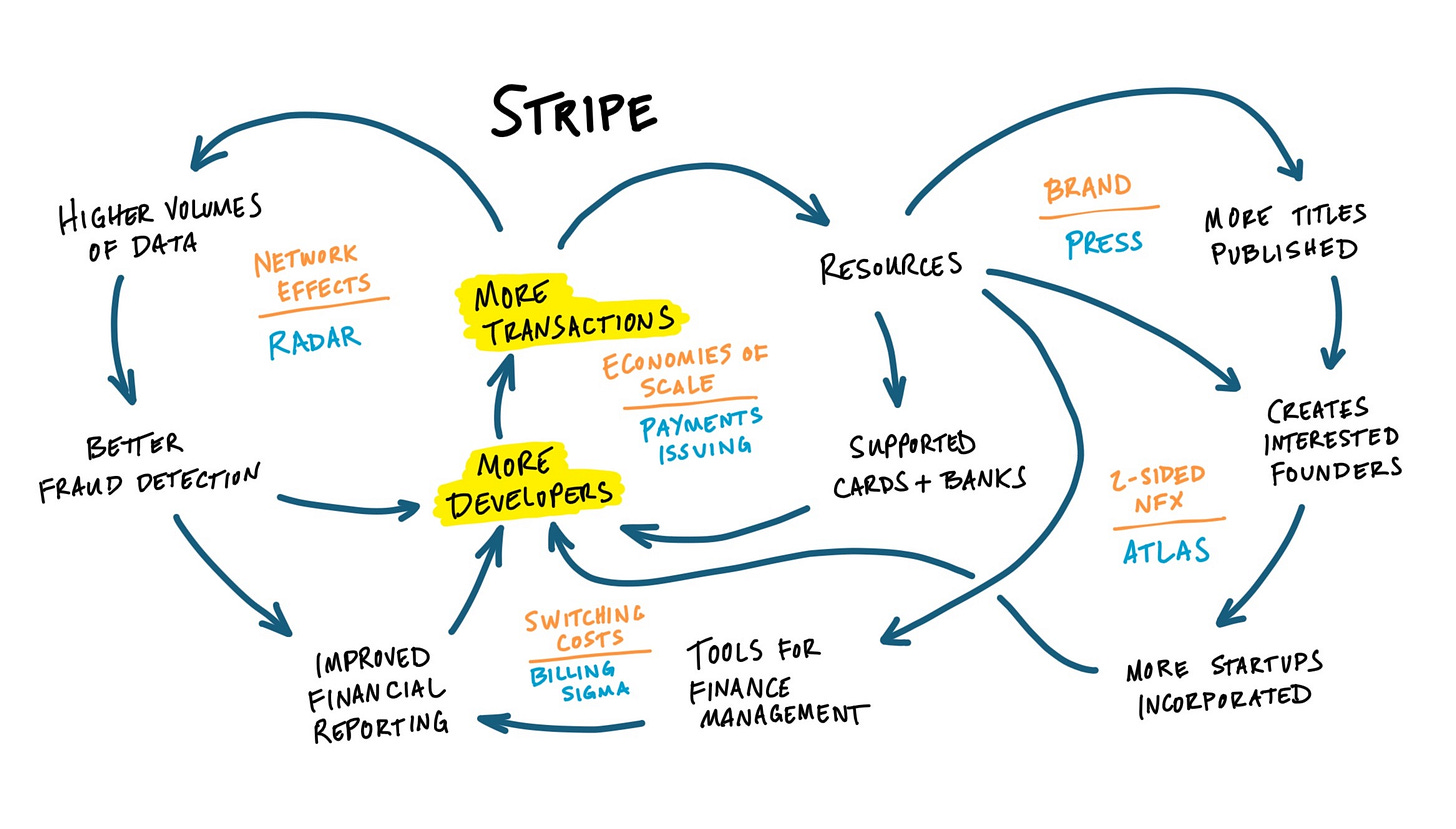

My favorite businesses to learn from all have strong systems-thinking mindsets. Many tech companies fall into this category: Stripe, Shopify, Amazon, Netflix, and others. They've built cultures around this sort of thinking, resulting in the resilience, modularity, and decentralization required to build healthy, long-term businesses.

Listen to Shopify's CEO Tobi Lütke talk about the company and his mindset in building it, and the systems thinking is plain as day. Read any interview with him and you'll hear about his interest in video games as a learning medium: how they teach you to allocate resources, distribute your attention, and make quick decisions with incomplete information. He commonly references the real-time strategy game StarCraft as a proving ground for refining your skills at attention management.

Another game you'll hear Tobi reference is Factorio, a simulation game in which you build massive factories, starting with primitive tools and natural resources. As your factory grows, you have to manage the throughput of its systems as you automate steps and interconnect production lines: from mined ore to refined metal to machine parts to manufacturing equipment, all of which need fuel and produce pollution you then have to mitigate, and on and on. Watch some videos of players' large factories and you'll see the relevance to systems thinking. Zooming in on these large systems exposes individual subsystems, constraints, and feedback loops. And just as with the StarCraft example, when things get complex your attention becomes more and more precious, and you have to dole it out effectively. Factorio lets you zoom out to the big picture and see the larger-scale impacts of small changes to interconnections.

I really like this focus on attention as the scarcest resource. I've sat in hundreds of planning meetings about strategy, budgets, and other big business decisions, and attention is almost never mentioned directly as a key resource. Sure, if you're advocating for developer time to build a specific product feature, that's attention. But I find it useful to think of all the time in the day as part of one attention pool. Most businesses end up plowing enormous quantities of aggregate attention into overhead, meetings, and research efforts, far more than we typically realize. I loved this anecdote from Sriram Krishnan's recent interview with Tobi about the time he programmatically deleted all recurring meetings from Shopify calendars:

We found that standing meetings were a real issue. They were extremely easy to create, and no one wanted to cancel them because someone was responsible for its creation. The person requesting to cancel would rather stick it out than have a very tough conversation saying, “Hey, this thing that you started is no longer valuable.” It’s just really difficult. So, we ran some analysis and we found out that half of all standing meetings were viewed as not valuable. It was an enormous amount of time being wasted. So we asked, “Why don't we just delete all meetings?”

Recurring meetings are just one of many examples of the creeping temptation to burn attention in ways that eventually become totally wasteful. Yet we do it all the time.

Stripe also exudes big-picture systems thinking. They've become well known for the strong culture of reading and writing they've built. They even launched a magazine and a book publisher to show how serious they are about fostering learning (even outside their own company!).

This type of thinking is paramount for building a resilient learning organization. If you make your company adaptable in how it responds to stimuli, rather than fixing its responses in advance, you'll have a more dynamic company that stays viable over the long term. Since we all have to operate in a perpetually ambiguous environment, setting up rigid, formalized, predictable structures might make your organization easier to understand (you can draw a nice clean org chart and write big SOP docs), but it can cost you the organization's ability to search for, and reach, better global maxima.

Legibility, Revisited

Lastly, I want to briefly revisit James Scott's notion of "legibility" from his epic Seeing Like a State, an idea I jammed on in RE #4.

I'm struck by how closely systems mindsets parallel Scott's critique of "high modernism." Systems thinking assumes constraints, complexity, and emergence, and counsels you to live within those bounds. The high modernist project, by contrast, attempts to simplify the unsimplifiable: our desire for legibility compels us to ignore aspects of complex systems in service of simplification.

Our ability to predict, model, and understand is limited by what we can measure, and in complex, interconnected systems there are always many variables we can't measure accurately. Emergence is a theme that undergirds many interesting ideas like this one. Emergent properties often can't be measured in advance, because they don't yet exist at the time we're making our measurements.

The answer isn't a binary "ambiguity is bad" or "ambiguity is good." Ambiguity is an omnipresent fact of life. We should spend time figuring out how to work within those constraints rather than attempting to control them.

As always, thank you for reading. If you’ve enjoyed this, forward it to your friends and colleagues, or subscribe yourself if you haven’t already. Let me know what you think of the newsletter so far! Also, check out my blog for more of my writing, or follow me on Twitter.